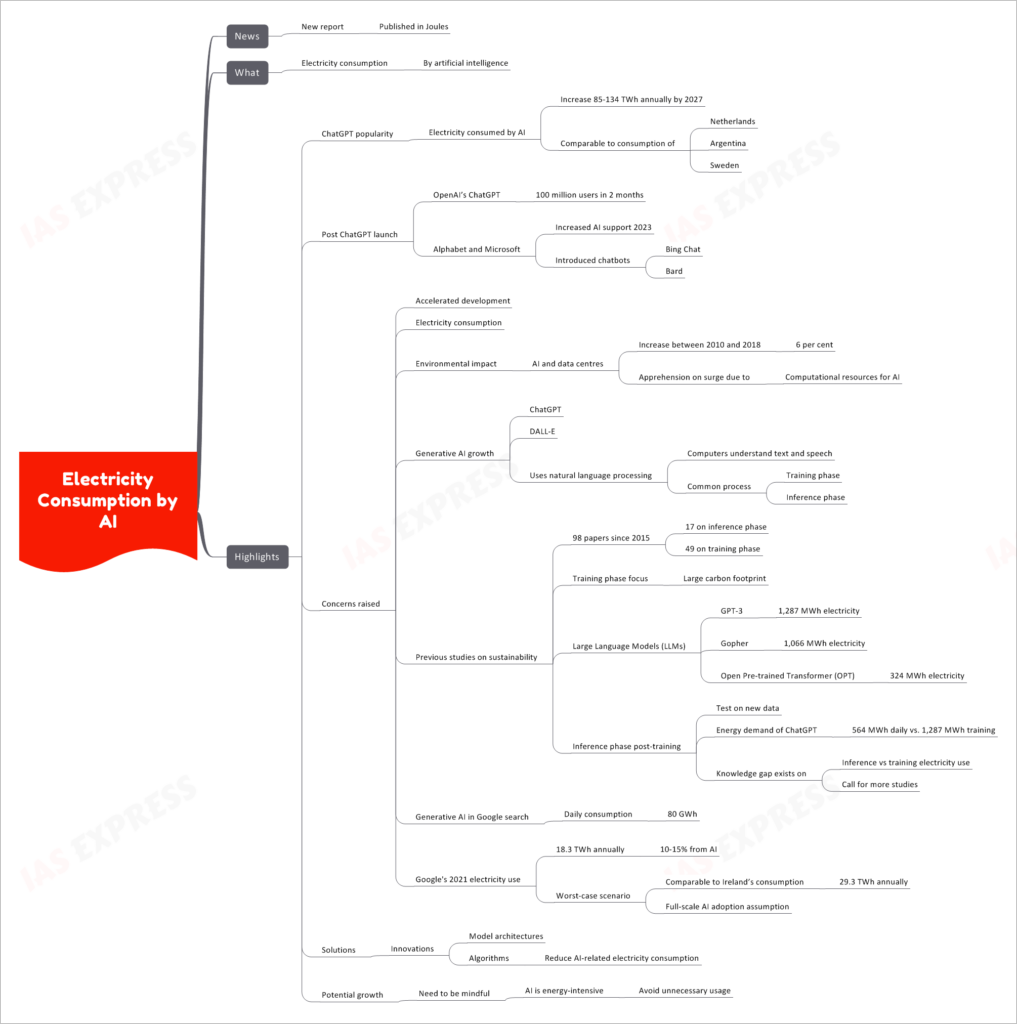

Electricity Consumption by AI

A new report published in the journal Joule has shed light on the growing electricity consumption of artificial intelligence (AI) systems, raising concerns about their environmental impact.

The Report and Its Findings

Projected Electricity Consumption

- According to the report, electricity consumption by AI could rise significantly by 2027.

- By then, AI could consume between 85 and 134 terawatt-hours (TWh) of electricity annually.

- This consumption is comparable to the electricity usage of entire countries like the Netherlands, Argentina, and Sweden.
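Figures of this size follow from simple arithmetic. The sketch below assumes a fleet of about 1.5 million AI servers running at full power around the clock, each drawing between 6.5 kW (low case) and 10.2 kW (high case). These inputs are illustrative assumptions that are not stated in this post, and the helper `annual_twh` is a hypothetical name used only for this example.

```python
# Back-of-the-envelope estimate of annual AI server electricity use.
# ASSUMPTIONS (not stated in this post): ~1.5 million AI servers, each
# drawing 6.5 kW (low case) to 10.2 kW (high case), running 24/7 all year.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours


def annual_twh(num_servers: float, kw_per_server: float) -> float:
    """Return annual consumption in terawatt-hours (1 TWh = 1e9 kWh)."""
    kwh = num_servers * kw_per_server * HOURS_PER_YEAR
    return kwh / 1e9


SERVERS = 1.5e6  # hypothetical fleet size

low = annual_twh(SERVERS, 6.5)    # low-power case
high = annual_twh(SERVERS, 10.2)  # high-power case

print(f"{low:.1f} to {high:.1f} TWh per year")
```

Because the high case only swaps in a larger per-server power draw, changing the assumed fleet size shifts both ends of the range proportionally.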

After the Launch of ChatGPT

- OpenAI’s ChatGPT, a popular AI language model, gained 100 million users within just two months of its launch.

- Alphabet and Microsoft stepped up their AI efforts in 2023, launching the chatbots Bard and Bing Chat, respectively.

Concerns Raised

- Accelerated development in AI has led to increased electricity consumption, raising environmental concerns.

- Global data center electricity use grew by about 6 percent between 2010 and 2018.

- The main concern is the rapid growth in computing resources that AI requires.

Generative AI Growth

- Generative AI tools such as ChatGPT and DALL-E create new content, such as text and images, in response to natural-language prompts.

- AI models go through two phases: training and inference.

- Previous studies highlight the large carbon footprint of the training phase.

- Large language models (LLMs) such as GPT-3 and Gopher consume significant electricity during training; training GPT-3 alone is estimated to have used about 1,287 megawatt-hours (MWh).

- Far less is known about how much electricity inference uses compared with training, so more research is needed.

Generative AI in Google Search

- If Google were to use generative AI in every search, it could consume approximately 80 gigawatt-hours (GWh) of electricity per day, or about 29.2 TWh per year.

- In 2021, Google's total electricity consumption was 18.3 TWh, with AI accounting for 10 to 15 percent of it.

- In a worst-case scenario of full-scale AI adoption in search, Google's AI electricity use alone could rival Ireland's annual consumption of 29.3 TWh.
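The figures in this section fit together through simple arithmetic. The sketch below assumes about 9 billion searches per day at roughly 8.9 Wh per AI-assisted search; both inputs are illustrative assumptions that are not given in this post. The 18.3 TWh total, the 10 to 15 percent AI share, and Ireland's 29.3 TWh come from the bullets above.

```python
# Rough consistency check of the Google Search figures.
# ASSUMPTIONS (not stated in this post): ~9 billion searches per day and
# ~8.9 Wh of electricity per AI-assisted search.

SEARCHES_PER_DAY = 9e9
WH_PER_AI_SEARCH = 8.9

daily_gwh = SEARCHES_PER_DAY * WH_PER_AI_SEARCH / 1e9  # Wh -> GWh
annual_twh = daily_gwh * 365 / 1e3                      # GWh -> TWh

# Google's 2021 total and the 10-15% AI share are taken from the post.
GOOGLE_TOTAL_TWH = 18.3
ai_twh_low = GOOGLE_TOTAL_TWH * 0.10
ai_twh_high = GOOGLE_TOTAL_TWH * 0.15

IRELAND_TWH = 29.3  # Ireland's annual electricity use, from the post

print(f"AI in every search: {daily_gwh:.1f} GWh/day, {annual_twh:.1f} TWh/year")
print(f"Google's AI use in 2021: {ai_twh_low:.1f} to {ai_twh_high:.1f} TWh")
print(f"Worst case as a share of Ireland's use: {annual_twh / IRELAND_TWH:.1%}")
```

Multiplying 80 GWh by 365 days gives roughly 29.2 TWh, which is why the worst case lands so close to Ireland's 29.3 TWh, and more than ten times the 1.8 to 2.7 TWh that AI accounted for at Google in 2021.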

Solutions

- Innovations in model architectures and algorithms are being explored to reduce AI-related electricity consumption.

- Efficient AI development practices are crucial to mitigate the environmental impact.

Looking Ahead

- While AI offers tremendous potential, it’s important to be mindful of its energy-intensive nature.

- Reducing unnecessary AI usage and optimizing algorithms can contribute to a more sustainable future.

If you like this post, please share your feedback in the comments section below so that we will upload more posts like this.